Whenever there is talk of infusing technology, automation and intelligence into tasks or processes, the proposed solutions fall into two broad buckets. The first is Artificial Intelligence in the conventional sense (machine learning, deep learning and cognitive learning), which typically works without human intervention and is driven by an algorithm to achieve its goal. The AI program defines how both regular transactions and exceptions are handled: a machine does the job in physical, touch-and-feel work such as manufacturing, and an algorithm produces the result in purely online work. The second category applies Artificial Intelligence in conjunction with humans, and human intervention and interaction are a critical part of completing the job successfully. This second type is known as Augmented Intelligence.

Another way to distinguish augmented from artificial intelligence is the degree to which algorithms, and the programs running physical machines, are expected to make decisions on their own. Is decision-making assisted by humans, controlled by humans, or entirely outside human control?

It’s critical to note that while the technologies and fundamentals powering Artificial Intelligence and Augmented Intelligence are largely the same, their applications, goals and objectives are distinctly different. Simply put, AI creates an environment without humans, while Augmented Intelligence, or Intelligence Augmentation as it’s often called, seeks to create an environment that betters humans and human endeavors.

There’s virtually no major industry that modern AI (more specifically, “narrow AI,” which performs objective functions using data-trained models and often falls into the categories of deep learning or machine learning) hasn’t already affected. That’s especially true of the past few years, as data collection and analysis have ramped up considerably thanks to robust IoT connectivity, the proliferation of connected devices and ever-speedier computer processing.

- Manufacturing: AI-powered robots work alongside humans to perform a limited range of tasks such as assembly and stacking, and sensors feeding predictive analytics keep equipment running smoothly.

- Healthcare: In the comparatively AI-nascent field of healthcare, diseases are more quickly and accurately diagnosed, drug discovery is sped up and streamlined, virtual nursing assistants monitor patients and big data analysis helps to create a more personalized patient experience.

- Education: Textbooks are digitized with the help of AI, early-stage virtual tutors assist human instructors and facial analysis gauges the emotions of students to help determine who’s struggling or bored and better tailor the experience to their individual needs.

- Media: Journalism is harnessing AI, too, and will continue to benefit from it. Bloomberg uses its Cyborg technology to help make quick sense of complex financial reports. The Associated Press employs the natural language abilities of Automated Insights to produce 3,700 earnings report stories per year, nearly four times as many as in the recent past.

- Customer Service: Last but hardly least, Google is working on an AI assistant that can place human-like calls to make appointments at, say, your neighborhood hair salon. In addition to words, the system understands context and nuance.

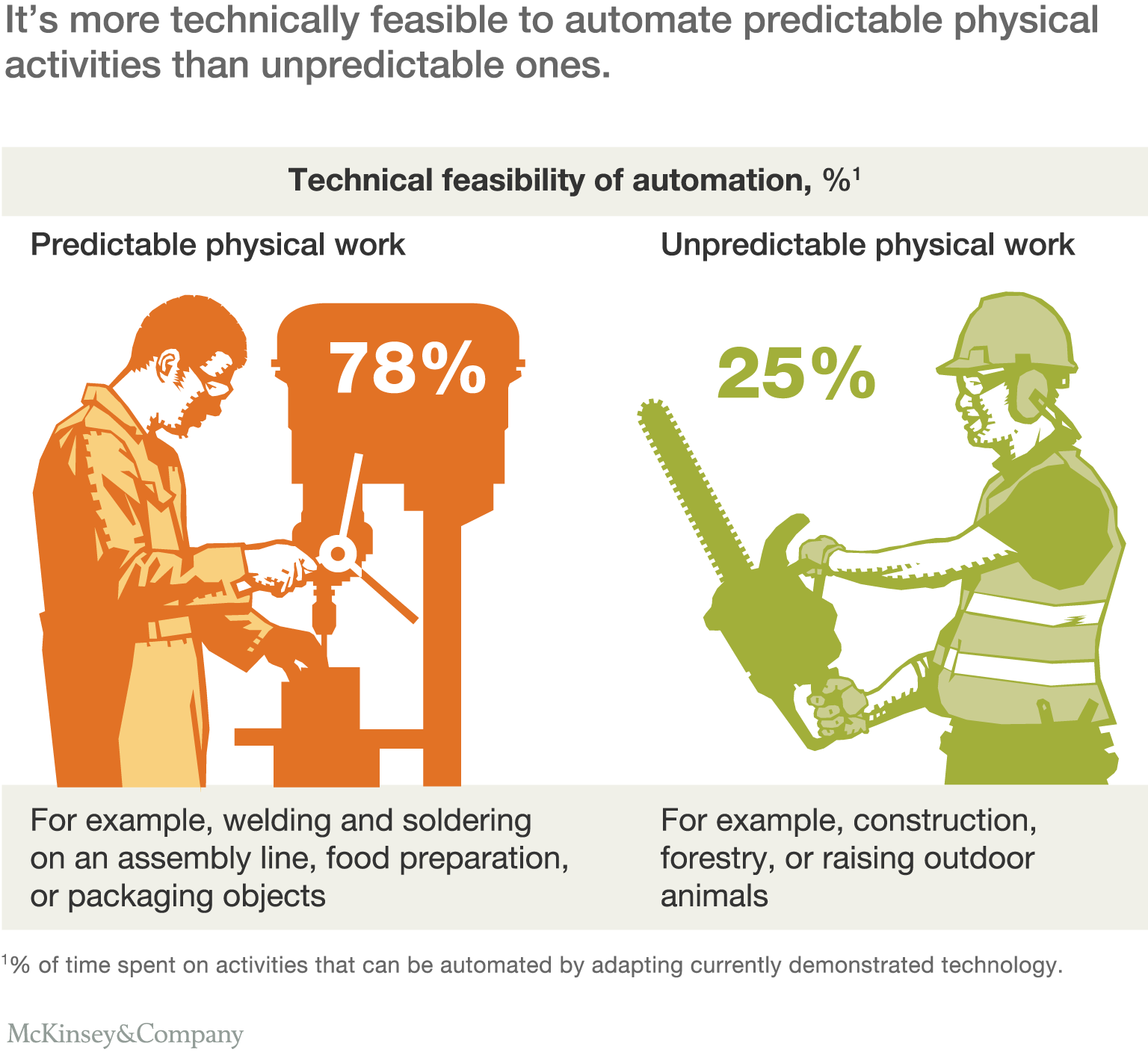

Source: McKinsey & Company

According to a recent publication, of the 9,100 patents granted to IBM inventors in 2018, 1,600 (nearly 18 percent) were AI-related. Here’s another data point: Tesla founder and tech titan Elon Musk recently donated $10 million to fund ongoing research at the non-profit research company OpenAI, a mere drop in the proverbial bucket if his $1 billion co-pledge in 2015 is any indication. And in 2017, Russian president Vladimir Putin told school children that “Whoever becomes the leader in this sphere [AI] will become the ruler of the world.” He then tossed his head back and laughed maniacally.

The single biggest strength of Artificial Intelligence is the simple fact that it can do repetitive tasks better than anything else. The more quantitative and objective the job (separating things into bins, washing dishes, picking fruit, answering customer service calls), the more it consists of scripted tasks that are repetitive and routine in nature. In a matter of five, 10 or 15 years, such tasks will be displaced by AI.

Yet even the most pragmatic AI scientists stress that today’s AI is useless in two significant ways: it has no creativity and no capacity for compassion or love. Rather, it’s “a tool to amplify human creativity.” In other words, sure, you can teach AI to make brushstrokes on a canvas, but an AI-driven machine could never in a million years paint a Mona Lisa.

The most advanced robot, costing many millions in research dollars and years of work, cannot pick up a teacup the way a 2-year-old can. Nor can millions of sensors let a robot taste and smell the way humans do. Sure, a robot can make millions of calculations in a second, many hundreds of times more than the most intelligent human can; sure, its sensors can discern each smell or analyze each material down to its atoms and subatomic particles. But it cannot experience the thoughts, emotions and feelings that the human brain can.

Another major fear around AI is that AI and machine learning applications inherit human biases, based on preconceived notions and behaviors that humans either code into the program or use to influence it indirectly.

Augmented Intelligence, on the other hand, doesn’t replace humans with technology; rather, it uses Artificial Intelligence to help humans do a job.

In recent rankings of AI technologies by the value they create for businesses, Augmented Intelligence placed second, just below virtual agents. However, Gartner predicts that “decision support and AI augmentation will surpass all other types of AI initiatives,” moving into first place this year and then surging by 2025 to become around twice as valuable as virtual agents.

Just as in regular project management, any organization looking to employ AI first needs to define its use cases and requirements clearly and describe the Big Y, the big problem it’s trying to solve. Next, it needs to define its business goals, or the product it wants to build. Then it should outline the data requirements and functionality needed to meet those goals and, finally, the return on investment (ROI) it expects.

What makes AI and machine or cognitive learning projects unique is that they also need to consider the human-machine dynamic. Which parts of the chain, or which components of the product or solution, will be handed over entirely to machines, and which will be retained by the organization’s people? Where a machine or algorithm makes the decision, is it completely autonomous, or does a human monitor it? Or is the machine responsible only for feeding data, information or half-finished work to a human who makes the final decision? Given the scope of these decisions, it makes sense to create an AI role matrix that can evolve over time. The matrix lays out specifically the role the AI or cognitive learning system will play in each function or process.

Source: USM Business Systems
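At its core, an AI role matrix is a lookup table from process steps to levels of machine autonomy. The sketch below is a minimal, hypothetical Python version: the three levels mirror the questions raised above (the machine feeds a human, the machine decides under human monitoring, the machine decides alone), while the loan-processing steps and the `promote` helper are illustrative assumptions, not a standard format.

```python
from enum import Enum


class Role(Enum):
    """Hypothetical autonomy levels, ordered from least to most machine autonomy."""
    ASSIST = "machine prepares data; human decides"
    SUPERVISED = "machine decides; human monitors"
    AUTONOMOUS = "machine decides and acts alone"


# Illustrative role matrix for a made-up loan-processing workflow
role_matrix: dict[str, Role] = {
    "document intake": Role.AUTONOMOUS,
    "fraud screening": Role.SUPERVISED,
    "credit scoring": Role.ASSIST,
    "final approval": Role.ASSIST,
}


def steps_needing_human(matrix: dict[str, Role]) -> list[str]:
    """List the steps where a human makes or monitors the decision."""
    return [step for step, role in matrix.items() if role is not Role.AUTONOMOUS]


def promote(matrix: dict[str, Role], step: str) -> dict[str, Role]:
    """Return a copy of the matrix with one step moved a level toward autonomy.

    This is one way the matrix could "evolve over time" once a step proves reliable.
    """
    levels = list(Role)  # definition order: ASSIST, SUPERVISED, AUTONOMOUS
    current = levels.index(matrix[step])
    updated = dict(matrix)
    updated[step] = levels[min(current + 1, len(levels) - 1)]
    return updated


print(steps_needing_human(role_matrix))
# ['fraud screening', 'credit scoring', 'final approval']
```

Returning a copy rather than mutating the matrix keeps earlier versions around, which helps when auditing who (or what) was responsible for a decision at a given time.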

Getting to truly autonomous intelligence, a fully independent AI model powered by cognitive learning, is proving to be really difficult. Even a single instance of non-compliance or faulty decision-making can throw the entire program off track.

During Tesla’s 2019 Autonomy Day, CEO Elon Musk said the company expected to have one million vehicles on the road by the end of 2020 that could function as robotaxis. Though the semi-autonomous Autopilot and Full Self-Driving (FSD) features are loved by some, others say Musk’s driverless dream is far from becoming a reality. Despite many hundreds of millions of dollars in investment and almost a decade of effort, Tesla’s “fully autonomous” driving mode is not fully autonomous; in the best case it is viewed only as a partially augmented driving mode, in which the human driver must stay in control. Many still view it as unfit for our roads. Just as the hype around autonomous driving was skyrocketing, and Tesla had started charging a hefty premium for its self-driving feature, a handful of incidents and accidents changed all that. False positives and false negatives, which are inherent in the algorithms powering autonomous driving software, lead to complications of their own, creating as many problems as they solve. Even in the best-case scenario, public confidence in self-driving technology is still many years, if not decades, away.

Source: ABC News
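A toy classifier makes it easier to see why false positives and false negatives cannot simply be engineered away. In the minimal sketch below (made-up scores, not data from any real driving system), a hypothetical detector turns a confidence score into a yes/no decision using a threshold, and moving that threshold trades one kind of error against the other.

```python
def error_counts(scores: list[float], labels: list[bool], threshold: float) -> tuple[int, int]:
    """Return (false positives, false negatives) when scores >= threshold count as detections."""
    false_pos = sum(1 for s, y in zip(scores, labels) if s >= threshold and not y)
    false_neg = sum(1 for s, y in zip(scores, labels) if s < threshold and y)
    return false_pos, false_neg


# Made-up detector scores; True means an obstacle was really there
scores = [0.95, 0.80, 0.62, 0.55, 0.40, 0.30, 0.15, 0.05]
labels = [True, True, False, True, False, True, False, False]

for threshold in (0.25, 0.5, 0.7):
    fp, fn = error_counts(scores, labels, threshold)
    print(f"threshold={threshold}: false positives={fp}, false negatives={fn}")
```

At a threshold of 0.25 this yields 2 false positives and no false negatives; at 0.7 it yields none and 2 respectively. Raising the threshold cuts phantom detections but misses more real obstacles, and no setting removes both kinds of error unless the scores separate the classes perfectly.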

Watching the YouTube training sessions posted by Waymo, the Alphabet subsidiary in charge of self-driving cars, reveals the concerns with self-driving technology. One video shows a car that repeatedly, and for no apparent reason, stops in the middle of a street and then drives off again. The explanation: a passer-by was carrying a STOP sign sticking out of his bag, which misled the vehicle. In other words, the machine can drive itself, but it cannot interpret a ‘stop’ signal in context or grasp the nuances of incidents on public roads.

Loan underwriters at top banks quickly realized that leaving decision-making to a computer program is a recipe for disaster, as the program has built-in biases and no amount of learning can make it foolproof. Hence, in most cases AI programs are limited to the first few stages of the process, while the critical decision is made by a human.

Similarly, the Apple Card was accused of discriminating against women, reportedly giving them 50% less credit than men with the same income and profile.
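One simple way an auditor might surface a disparity like this is to compare average outcomes across groups among otherwise identical applicants. The sketch below uses made-up numbers chosen to mirror the 50% gap described above; the 0.8 review tolerance is an arbitrary assumption, and a real fairness audit would need to control for far more variables.

```python
from statistics import mean

# Made-up applicants with the same income and credit profile
applicants = [
    {"gender": "F", "income": 90_000, "limit": 5_000},
    {"gender": "F", "income": 90_000, "limit": 6_000},
    {"gender": "M", "income": 90_000, "limit": 10_000},
    {"gender": "M", "income": 90_000, "limit": 12_000},
]


def limit_ratio(rows: list[dict], group: str, reference: str) -> float:
    """Average credit limit of `group` divided by that of `reference` (1.0 means parity)."""
    group_avg = mean(r["limit"] for r in rows if r["gender"] == group)
    reference_avg = mean(r["limit"] for r in rows if r["gender"] == reference)
    return group_avg / reference_avg


def needs_review(ratio: float, tolerance: float = 0.8) -> bool:
    """Flag the model for human review when the ratio falls below an assumed tolerance."""
    return ratio < tolerance


ratio = limit_ratio(applicants, group="F", reference="M")
print(ratio, needs_review(ratio))  # 0.5 True
```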

For an everyday example of AI that learns from past data while leaving the final choice to a human, consider Netflix. Say you recently watched “Orange Is the New Black.” Netflix may then suggest other shows with prison themes, documentaries about life behind bars, or shows with a strong female lead.

Based on past data (the shows and movies you recently watched), it predicts what you will want to watch next. Once you make your latest selection, it adjusts its recommendations to further customize your experience.
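Netflix’s production recommender is far more sophisticated and is not described here, but the basic idea of learning from viewing history can be shown with a minimal content-based sketch. The catalog, tags and scoring rule below are hypothetical: each watched title adds its tags to a user profile, unwatched titles are ranked by how strongly they match that profile, and every new selection updates the ranking.

```python
from collections import Counter

# Hypothetical catalog mapping titles to descriptive tags (not real Netflix metadata)
CATALOG: dict[str, set[str]] = {
    "Orange Is the New Black": {"prison", "female lead", "drama"},
    "Prison Documentary": {"prison", "documentary"},
    "Strong Heroine Drama": {"female lead", "drama"},
    "Jailbreak Thriller": {"prison", "thriller"},
    "Space Comedy": {"comedy", "sci-fi"},
}


def build_profile(watched: list[str]) -> Counter:
    """Count how often each tag appears in the viewing history."""
    return Counter(tag for title in watched for tag in CATALOG[title])


def recommend(watched: list[str], k: int = 2) -> list[str]:
    """Rank unwatched titles by the summed profile weight of their tags."""
    profile = build_profile(watched)
    candidates = [title for title in CATALOG if title not in watched]
    # sorted() is stable even with reverse=True, so ties keep catalog order
    ranked = sorted(
        candidates,
        key=lambda title: sum(profile[tag] for tag in CATALOG[title]),
        reverse=True,
    )
    return ranked[:k]


history = ["Orange Is the New Black"]
print(recommend(history))  # ['Strong Heroine Drama', 'Prison Documentary']

history.append("Prison Documentary")  # the viewer's latest selection
print(recommend(history))  # ['Strong Heroine Drama', 'Jailbreak Thriller']
```

After the second selection the “prison” tag carries twice the weight, which lifts the jailbreak title into the top two.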

According to a 2017 scholarly article on augmented intelligence by researchers Zheng and Wang, augmented intelligence models can be classified by the varying degrees of AI and human influence they involve.

- Human-in-the-loop (HITL) hybrid-augmented intelligence: The first classification is defined as an intelligent model that requires human interaction. In this type of system, a human is always part of the system and influences the outcome by giving further judgment whenever the computer returns a low-confidence result. HITL hybrid-augmented intelligence also readily allows for addressing problems and requirements that may not be easily trained or classified by machine learning.

- Cognitive computing (CC) based hybrid-augmented intelligence: The second classification refers, in general, to new software and/or hardware that mimics the function of the human brain and improves a computer’s capabilities of perception, reasoning and decision-making. In that sense, CC-based hybrid-augmented intelligence is a new computing framework that aims to build more accurate models of how the human brain and mind sense, reason and respond to stimuli, especially how to build causal models, intuitive reasoning models and associative memories in an intelligent system.
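The routing rule in the HITL description, where a human steps in when the computer returns a low-confidence result, can be sketched in a few lines of Python. The 0.8 threshold, the toy `toy_predict` and `toy_reviewer` functions, and the feedback list are illustrative assumptions, not part of Zheng and Wang’s framework.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Decision:
    label: str
    confidence: float
    decided_by: str  # "model" or "human"


def hitl_classify(
    item: str,
    predict: Callable[[str], tuple[str, float]],
    ask_human: Callable[[str, str], str],
    threshold: float = 0.8,
    feedback: Optional[list[tuple[str, str]]] = None,
) -> Decision:
    """Accept the model's answer when it is confident; otherwise defer to a human."""
    label, confidence = predict(item)
    if confidence >= threshold:
        return Decision(label, confidence, "model")
    # Low-confidence result: a human gives the final judgment
    human_label = ask_human(item, label)
    if feedback is not None:
        feedback.append((item, human_label))  # keep human judgments for later retraining
    return Decision(human_label, confidence, "human")


# Toy model and reviewer for illustration only
def toy_predict(item: str) -> tuple[str, float]:
    return ("approve", 0.95) if item == "routine claim" else ("approve", 0.55)


def toy_reviewer(item: str, suggested: str) -> str:
    return "reject"  # a human who overrules the model on unusual cases


collected: list[tuple[str, str]] = []
print(hitl_classify("routine claim", toy_predict, toy_reviewer, feedback=collected))
print(hitl_classify("unusual claim", toy_predict, toy_reviewer, feedback=collected))
print(collected)  # [('unusual claim', 'reject')]
```

The collected human judgments are exactly the cases the model found hard, which is why HITL setups often feed them back as training data.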

Augmented intelligence follows a five-function cadence that allows it to learn with human influence. It repeats a cycle of understanding, interpretation, reasoning, learning, and assurance. Here’s how it works:

- Understanding: The system is fed data, which it breaks down and derives meaning from.

- Interpretation: New data is input; the system draws on old data to interpret the new data sets.

- Reasoning: The system creates “output” or “results” for the new data set.

- Learning: Humans give feedback on the output, and the system adjusts accordingly.

- Assurance: Security and compliance are ensured using blockchain or AI technology.

Source: Global Data Magazine
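To make the cycle concrete, here is a deliberately tiny Python sketch in which each of the five functions is a method. It rests on several assumptions not found in the source: “understanding” is just averaging historical values, “reasoning” flags values that stray too far from that average, “learning” adjusts the tolerance when a human disagrees, and a simple SHA-256 hash chain stands in for blockchain-based assurance.

```python
import hashlib
import json
from statistics import mean


class AugmentedCycle:
    """Hypothetical understand -> interpret -> reason -> learn -> assure loop."""

    def __init__(self, tolerance: float = 10.0) -> None:
        self.baseline = 0.0
        self.tolerance = tolerance
        self.audit_log: list[dict] = []
        self._last_hash = "0" * 64  # starting value for the hash chain

    def understand(self, history: list[float]) -> None:
        # Understanding: derive meaning (here, just the average) from past data
        self.baseline = mean(history)

    def interpret(self, value: float) -> float:
        # Interpretation: read the new value in light of what was learned before
        return value - self.baseline

    def reason(self, deviation: float) -> str:
        # Reasoning: produce an output for the new data
        return "flag" if abs(deviation) > self.tolerance else "ok"

    def learn(self, deviation: float, output: str, human_label: str) -> None:
        # Learning: when the human disagrees, move the tolerance toward their judgment
        if output == "flag" and human_label == "ok":
            self.tolerance = abs(deviation)
        elif output == "ok" and human_label == "flag":
            self.tolerance = abs(deviation) / 2

    def assure(self, record: dict) -> None:
        # Assurance: chain each record to the previous one so later tampering is detectable
        payload = json.dumps(record, sort_keys=True) + self._last_hash
        self._last_hash = hashlib.sha256(payload.encode()).hexdigest()
        self.audit_log.append({**record, "hash": self._last_hash})

    def run(self, value: float, human_label: str) -> str:
        deviation = self.interpret(value)
        output = self.reason(deviation)
        self.learn(deviation, output, human_label)
        self.assure({"value": value, "output": output, "human": human_label})
        return output


cycle = AugmentedCycle()
cycle.understand([100, 102, 98])
print(cycle.run(115, human_label="ok"))  # flag (15 exceeds the initial tolerance of 10)
print(cycle.run(115, human_label="ok"))  # ok (the tolerance was widened to 15)
```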

It is clear that AI is here to stay. Humans rely deeply on Artificial Intelligence, which has been around for decades. Yet the future of Artificial Intelligence is brightest alongside humans, as it morphs into Augmented Intelligence. Having humans and machines work hand in hand is a win-win for both parties.

Most scientists and researchers agree that it is extremely implausible, if not impossible, to imagine a collective human failure so complete that AI is allowed to grow unchecked while all of its other uses beneficial to humankind are ignored.

One thing is certain, though: despite ominous predictions and warnings of doomsday scenarios arising from AI’s impact on humanity and society, AI will never take over the world or morph into Terminator-style machines or the kind of intelligence depicted in Will Smith’s I, Robot.