Big Tech controls lives yet has difficulty controlling what it hosts!

Introduction

When Mark Zuckerberg wrote an op-ed in The Washington Post in March 2019 asking governments and regulators to step in more aggressively to police the internet, he may have articulated what many insiders feel about governing the internet, and specifically about the content users upload for others to view, download and use. Yet not everything here seems above board: the challenges Zuckerberg cited so eloquently in his op-ed are the same challenges that have plagued the tech industry for years. What has changed that governments are now being called into action, when until recently the tech industry fought tooth and nail for freedom of expression and freedom of speech?

All content platforms and social media companies must keep the content flowing, because that is the business model: content captures attention, provides viewership and generates data (user statistics). The platforms then sell that attention (read: viewership), enriched by that data (read: customized ads). But how do you deal with the objectionable, disgusting, pornographic, illegal, or otherwise verboten content uploaded alongside legitimate content?

The one thing we know for sure is that you can’t do it all with computing. To examine these issues, Sarah T. Roberts, an assistant professor of information studies at UCLA, pulled together a first-of-its-kind conference on commercial content moderation at UCLA. According to Roberts, “In 2017, the response by firms to incidents and critiques of these platforms is not primarily ‘We’re going to put more computational power on it,’ but ‘We’re going to put more human eyeballs on it.’”

The debate over internet use and the governance of uploaded content is not new. It has been going on for some time and is now reaching peak interest around the world: many governments and regulators, such as those in the EU and the UK, are promising action; a few, like China, are already taking strong action; and others are simply watching to see how the debate pans out and what changes, if any, result.

The big tech players around the world have realized one thing: controlling or governing the internet is a tough tightrope walk. If a platform imposes controls that are too strict, through user-reporting mechanisms, AI-backed algorithms and human monitors flagging and removing content, it gets labeled a ‘dictatorship’ and an enemy of free speech. If a platform imposes too few controls, hosting content freely and with little censorship, it gets run over by activists from all ends of the spectrum, left to right. It is like an overflowing pot left simmering too long. The only difference is that no one can lift the pot, and no matter which way it is tilted, the boiling contents are sure to leave scalding marks.

Image Credit: Internet

Governments should Regulate!

Technology experts, and the big tech companies themselves, believe that for Zuckerberg and his peers, “regulation” is no longer an uncouth word. With changing times, big tech is now embracing regulation, not out of any newfound respect for it but purely as a business measure. From the early days, when big tech companies portrayed all regulation as reprehensible and fought it tooth and nail, to today, when they welcome it, the transformation could not be more melodramatic.

Most of big tech today sees regulation as a set of common rules, enforced by governments and regulators, that will allow it to further cement its dominance of the internet. And if anything goes wrong, the companies always have the comfort of pointing the finger at the “Regulator” big brother.

Zuckerberg laid out four broad areas in which governments and regulators should govern more effectively and more deeply.

Harmful content

The problem: Facebook serves as a platform for its billions of regular users to post, view and offer feedback on the content hosted on its servers. But when that content is more “terrorist propaganda” than “brunch photo,” or more “porn” than “essential context” for an image, the company has struggled to find the right approach to removing it in time. The traditional method of company moderators reviewing user-reported infractions is too time-consuming, while AI-powered algorithms are too imprecise. Zuckerberg said he agrees with lawmakers that Facebook has too much power over what constitutes free speech.

Mark’s solution: Develop a more standardized approach by setting up an independent body of reviewers, who are not Facebook employees, to decide which removals stand and to enforce the rules. Think of it as a Supreme Court.

Objection: Harmful content can be divided into multiple buckets. Facebook breaks down the content it uses AI to detect proactively into seven categories: nudity, graphic violence, terrorism, hate speech, spam, fake accounts, and suicide prevention. It is unclear whether Mark is asking governments and regulators to look at one category or at all of them. The problem becomes even more interesting because at least five of the seven categories are already policed effectively using AI and ML techniques.

- Nudity and graphic violence: These are two very different types of content, but Facebook is using improvements in computer vision to proactively remove both. Facebook’s AI-backed algorithms rely on computer vision and a confidence score to determine whether or not to remove content. If the confidence is high, the content is removed automatically; if it is low, the system calls for manual review. The system’s confidence grows as it learns from the decisions it gets right. Facebook claims that it removes 97% of violent and graphic content, and 96% of nudity, before it is reported.

- Hate speech: Judging hate speech often requires human eyes and human understanding, because context is critical. Is something hateful, or is it being shared to condemn hate speech or raise awareness about it? Facebook says it has started using technology to proactively detect content that might violate its policies, starting with certain languages such as English and Portuguese. Safety teams then review the content so that what’s acceptable stays up, for example someone describing hate they encountered to raise awareness of the problem. In a major embarrassment, Facebook was used to spread fear and hate against Rohingya Muslims in Myanmar. Today, Facebook says it has hired more language-specific content reviewers, banned individuals and organizations that have broken its rules, and built new technology to make it easier for people to report violating content.

- Fake accounts: Facebook blocks millions of fake accounts every day at the point of creation, before they can do any harm. This is incredibly important in fighting spam, fake news, misinformation and bad ads. Recently, Facebook started using artificial intelligence to detect accounts linked to financial scams. An account contacting far more accounts than usual, a large volume of activity that seems automated, and activity that doesn’t originate from the geographic area associated with the account are clear warning signs. As a point of failure, however, Facebook did not recognize several fake accounts set up by a government agency to lure people seeking fake university admissions so they could stay in the US long term without proper authorization. Facebook later lodged a complaint with DHS about the fake accounts.

- Spam: The vast majority of work fighting spam is done automatically, using recognizable patterns of problematic behavior. For example, if an account posts over and over in quick succession, that is a strong sign something is wrong.

- Terrorist propaganda: The vast majority of this content is removed automatically, without anyone needing to report it first. Facebook is proud of how its AI systems have been able to remove most terrorist content, claiming that 99% of ISIS and Al-Qaeda content is removed before users report it.

- Suicide prevention: Facebook proactively identifies posts which might indicate that people are at risk, so that they can get help. Facebook claims it has passed on information about thousands of potential suicide attempts to local first responders.
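Two of the mechanisms in the list above are easy to picture in code: routing content by a classifier’s confidence (nudity and graphic violence) and flagging an account that posts over and over in quick succession (spam). The Python sketch below illustrates both under loudly stated assumptions: the threshold values, the `route` function, the `BurstDetector` class and its limits are all hypothetical, chosen for illustration, since Facebook has not published its actual thresholds or detection logic. It also assumes, beyond what Facebook describes, that content scoring below a lower threshold is simply left up.

```python
from collections import deque

# Hypothetical thresholds for illustration only; real values are not public.
AUTO_REMOVE_THRESHOLD = 0.95  # at or above: confident enough to remove automatically
REVIEW_THRESHOLD = 0.50       # assumption: below this, the content is left up


def route(confidence: float) -> str:
    """Route content by a classifier's confidence (0.0 to 1.0) that it violates policy."""
    if not 0.0 <= confidence <= 1.0:
        raise ValueError("confidence must be between 0 and 1")
    if confidence >= AUTO_REMOVE_THRESHOLD:
        return "auto_remove"   # high confidence: removed without a human
    if confidence >= REVIEW_THRESHOLD:
        return "human_review"  # lower confidence: queued for a moderator
    return "keep"


class BurstDetector:
    """Flag an account that posts more than max_posts times within window_seconds.

    Assumes timestamps for each account arrive in non-decreasing order.
    """

    def __init__(self, max_posts: int = 5, window_seconds: float = 60.0):
        self.max_posts = max_posts
        self.window_seconds = window_seconds
        self._history: dict[str, deque] = {}

    def record_post(self, account_id: str, timestamp: float) -> bool:
        """Record a post and return True if the account now looks like a spammer."""
        times = self._history.setdefault(account_id, deque())
        times.append(timestamp)
        # Drop posts that have slid out of the time window.
        while timestamp - times[0] > self.window_seconds:
            times.popleft()
        return len(times) > self.max_posts


if __name__ == "__main__":
    print(route(0.99), route(0.70), route(0.10))
    detector = BurstDetector(max_posts=5, window_seconds=60.0)
    print([detector.record_post("acct-1", t) for t in range(6)])
```

With these placeholder values the demo prints `auto_remove human_review keep` and then `[False, False, False, False, False, True]`: the sixth post within one minute trips the flag. A sliding window is used rather than fixed one-minute buckets, so a burst that straddles a bucket boundary is still caught.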

Image Credit: Internet

Protecting elections

The problem: Misinformation campaigns (aka “fake news”) on Facebook have interfered with democratic elections around the world. But as the company tries to provide more transparency, it is having trouble classifying what should or shouldn’t be considered “political.” To be fair to tech companies, censorship is a double-edged sword.

Mark’s solution: Let governments set common standards for verifying political actors. The NYT’s Mike Isaac thinks this could work: “When the next erroneous outburst inevitably occurs, Facebook could point toward the law it was forced to follow.”

Objection: Facebook has comprehensively shown it can tackle complicated political situations. In the past, the company has complied with requests from leaders of Vietnam and other countries to censor content critical of those governments. For China, Facebook reportedly created a censorship tool that suppresses posts for users in certain geographies, as a way to potentially work with the government. In many Western countries, the company has reportedly used the same technology it uses to identify copyrighted videos to identify and remove ISIS recruitment material. Further, Facebook claims that it has developed special programs to give people more information about the ads they see. These features have since been expanded to Brazil and the UK, and will soon come to India.

Image Credit: The Verge

Privacy

The problem: The Cambridge Analytica scandal revealed that Facebook was playing fast and loose with user data. The fine print a user electronically signs has often given platforms incredible leeway over how they store and use users’ data, with little or no regulation of that use. There have been numerous breaches because companies paid little attention to keeping users’ data secure. To top it all off, tech companies have been known to sell the data or allow its use for shady purposes. Worst of all, there are no effective laws in place to prevent misuse and to compel preventive and corrective action. New privacy regulations, like Europe’s GDPR, have come into effect, but they are leading to an increasingly fragmented internet.

Mark’s solution: A global privacy framework à la GDPR.

Objection: Through their many data deals with parties wanting access to users’ data, Facebook and its affiliates have shown that commercial interests often win out over privacy concerns. Global frameworks like the one Mark suggests can take years to develop in the best case, and even then may not apply uniformly. Mark realizes this is a gamble, and one he is more than willing to take, since it is unclear who would regulate a global policy framework.

Image Credit: The Verge

Data portability

The problem: Should you, as an internet user, be able to freely move your personal info from one service to another?

Mark’s solution: Yes. (Easier to say when he owns WhatsApp, Instagram, and Messenger.)

Objection: Again, a solution that serves Facebook’s and big tech’s own interests is hardly breaking news!

Facebook content moderators in action

Image Credit: Internet

Monika Bickert and her trust and safety team at Facebook are focused on making sure that moderators get it right when reviewing content. Sixty people are dedicated solely to crafting the policies for the company’s 15,000 content moderators. These policies are not publicly available, as they are sensitive and form the “book” that safety teams and content moderators refer to when doing their jobs. The policies and their definitions of common terms, often nuanced down to local-language slang, are revised every two weeks to keep pace with the changing ways people interact and speak. These revision sessions are often called mini-legislative sessions, as teams across the company (engineering, legal, content reviewers) and external partners such as nonprofit groups provide recommendations to Bickert’s team for inclusion in the policy guidebook.

Facebook insiders such as Neil Potts emphasize the similarity between what Facebook does and what governments do. “We do really share the goals of government in certain ways,” he said. “If the goals of government are to protect their constituents, which are our users and community, I think we do share that. I feel comfortable going to the press with that.”

Image Credit: The Verge

Heart of the matter

In many ways, the change of heart across the global tech landscape has resulted from the difficulties platforms faced while trying to control and govern the harmful content uploaded to them. As the efforts to govern the internet continue, many of those fighting the battle daily are coming to realize how difficult the seemingly simple question of ‘what content should be allowed’ really is. Let’s face it: the internet was never known for deferring to people’s preferences. The advocates of freedom of expression and free speech, often the big tech companies themselves, fought for as little government control as possible, decrying every move made by governments or regulators around the world.

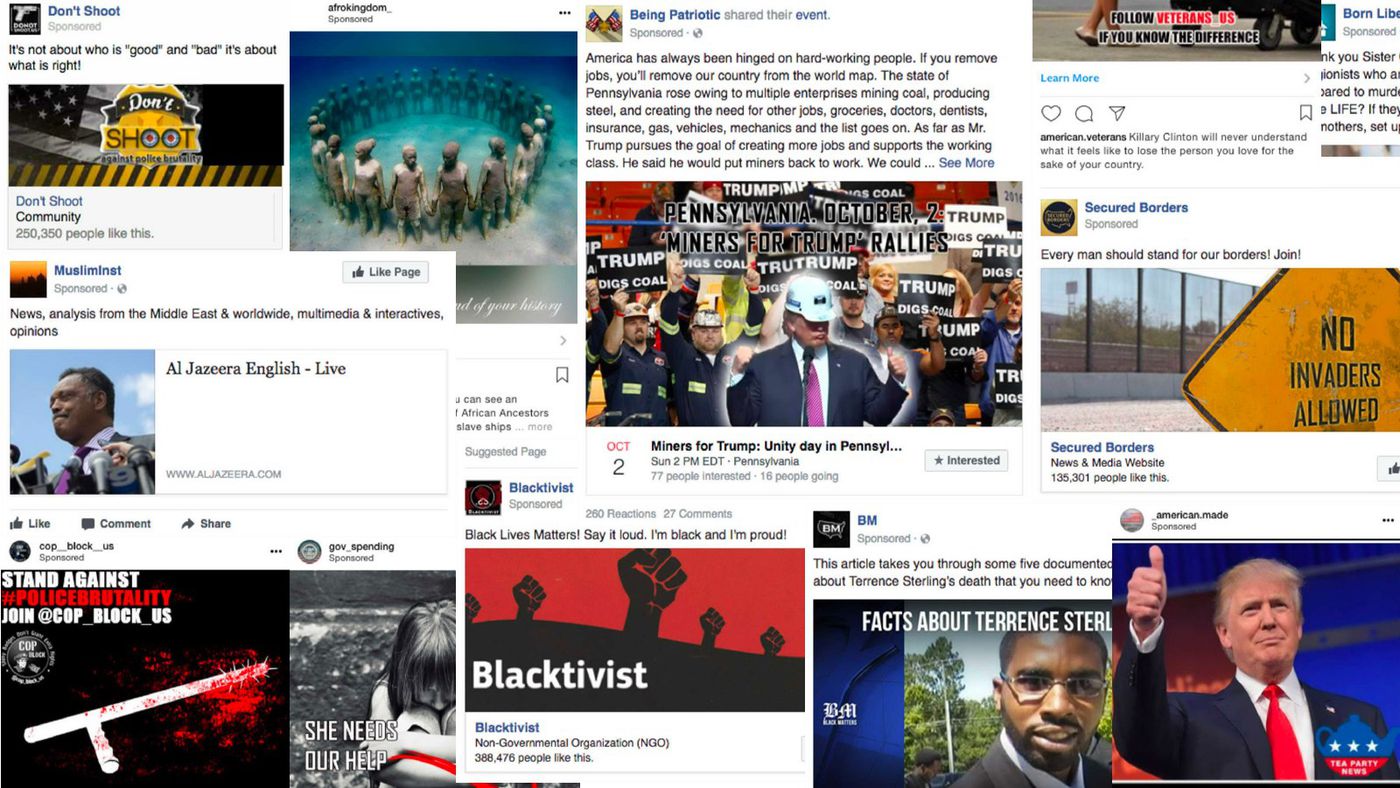

Image Credit: Internet

After the 2016 US presidential election, as investigations expanded into how agents posing as advertisers had used social media platforms to spread biased propaganda favoring one side, the debates over transparency in social media, control over content on the internet and that content’s influence on society converged. Google, Facebook and Twitter were identified as primary targets of these foreign state-sponsored actors, and the three companies sat through multiple congressional hearings last year about how foreign agents used and abused their platforms to manipulate Americans. In the aftermath, Facebook tried desperately, and failed, to fix its fake news problem while simultaneously reckoning with a group of Russian government-backed agents masquerading as advocacy organizations to keep pushing socially divisive political messages. Facebook and Google had to apologize publicly, and indirectly admit to lax controls, after it was revealed that advertisers (read: fake ones) could use their platforms to target a subsection of society, or people of any age group or ethnicity, based on racist and bigoted interests such as “threesome rape” and “Jews ruin the world.”

Facebook hasn’t always taken content review as seriously as it does now. When Facebook Live launched, the review tool did not show which part of a reported video had generated user flags. So if a two-hour Facebook Live video was flagged for inappropriate content, reviewers had to skim through the entire two hours to find where the objectionable material might be. Of course, that was in the good old pre-election days.

Dangerous fake news has spread on platforms like Facebook in Myanmar, where the Rohingya ethnic minority is persecuted. The United Nations has explicitly blamed social media for its role in spreading the persecution, and this is not the only example of its kind.

After a man used Facebook to livestream his attack on two New Zealand mosques in March 2019, the video spread quickly. YouTube moderators fought hard to take the video down as new versions kept popping up, seemingly beating the controls YouTube has in place to immediately flag previously removed material. Uploaders slipped past by exploiting a loophole: exact re-uploads of the video are banned by YouTube, but videos that contain clips of the original footage must be sent to human moderators for review, which delays the process. And this loophole exists for a purely legitimate reason: to ensure that news videos using a portion of the footage in their segments aren’t removed along the way.
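The loophole is easier to see in code. The Python sketch below is a hypothetical model, not YouTube’s system: it uses SHA-256 digests of raw bytes as a stand-in for the perceptual fingerprints real platforms rely on (a cryptographic hash stops matching if a video is re-encoded even slightly), and the `ReuploadFilter` class and its chunk size are invented for illustration. An exact copy of a removed video is blocked outright, while an upload that merely contains a segment of it is routed to human review, which is the gap uploaders exploited.

```python
import hashlib


def _digest(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()


class ReuploadFilter:
    """Toy re-upload filter: block exact copies, send partial matches to humans.

    Bytes stand in for video frames; chunk_size stands in for a few seconds of footage.
    """

    def __init__(self, chunk_size: int = 4):
        self.chunk_size = chunk_size
        self._banned_whole: set[str] = set()
        self._banned_chunks: set[str] = set()

    def ban(self, video: bytes) -> None:
        """Remember a removed video and each aligned chunk of it."""
        self._banned_whole.add(_digest(video))
        size = self.chunk_size
        for start in range(0, len(video) - size + 1, size):
            self._banned_chunks.add(_digest(video[start:start + size]))

    def check(self, upload: bytes) -> str:
        """Return 'block', 'human_review', or 'publish' for a new upload."""
        if _digest(upload) in self._banned_whole:
            return "block"  # exact re-upload: removed automatically
        size = self.chunk_size
        # Slide one byte at a time so a clip is found at any offset in the upload.
        for start in range(len(upload) - size + 1):
            if _digest(upload[start:start + size]) in self._banned_chunks:
                # Contains banned footage, but could be a news segment quoting it.
                return "human_review"
        return "publish"


if __name__ == "__main__":
    f = ReuploadFilter(chunk_size=4)
    f.ban(b"ABCDEFGHIJKL")
    print(f.check(b"ABCDEFGHIJKL"))             # exact copy
    print(f.check(b"news intro EFGHIJ outro"))  # contains a clip
    print(f.check(b"unrelated footage"))        # no overlap
```

The demo prints `block`, `human_review` and `publish`. Because the banned chunks are aligned but the upload is scanned at every offset, any clip at least 2 × chunk_size − 1 bytes long is caught wherever it appears; it is caught, however, only for review, never for automatic removal, which mirrors the trade-off described above.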

Image Credit: Internet

YouTube today employs an estimated 12,000 to 14,000 people, while Facebook now has more than 30,000 people working on safety and security, about half of whom are content reviewers working out of 20 offices around the world. Similarly, Twitter employs close to 4,000 people on its content review team. Facebook also announced that approximately 1,000 people will work on election-focused advertising alone, to reduce the likelihood of the company being used by false advertisers again.

Beyond the manpower, the promised marvels of cutting-edge technology, a.k.a. powerful AI-based algorithms written specifically to identify, target, and take down or report harmful content, have been underperformers at best and a waste of money and time at worst. And let’s not forget: Facebook used AI-backed technology and had a team of roughly 5,000 content reviewers before the 2016 election, yet it still landed in significant trouble and under intense scrutiny. Facebook now claims that it has invested record amounts in the past year in keeping people safe and strengthening its defenses against abuse. Facebook also states that its policies have given people far more control over their information and more transparency into policies, operations and ads.

Image Credit: Vox

Facebook also says that, amid all its efforts to control content, it has started rolling out a content appeals process for users whose own content has been removed. The appeals process was announced much earlier, as if building hype around the topic mattered more than the topic itself. The company said it will extend the concept so users can also appeal decisions on reports they file about other people’s content. In addition, Facebook said it is training AI to detect and reduce the spread of “borderline content,” which it describes as “click-bait and misinformation.”

Nor was the content appeals process announced in 2018 a voluntary act of kindness on Facebook’s part. Many believe it is a two-pronged strategy as Facebook executives try to repair the company’s public image. First, it responds to increased global scrutiny of how content is treated, sanctioned or legitimized by content platforms. This scrutiny has been especially sharp since The Guardian published a leaked copy of Facebook’s content moderation guidelines, which describe the company’s policies for determining whether posts should be removed from the service. Second, Facebook executives hope such measures will be seen as increasing transparency and fairness, while the opposite is actually true. Moreover, any damage resulting from such transparent practices will pale in comparison with the risks Facebook will avert.

According to Guy Rosen, VP of Product Management at Facebook, “Artificial intelligence is very promising but we are still years away from it being effective for all kinds of bad content because context is so important. That’s why we have people still reviewing reports.” At Facebook, Rosen continues, “we continue to train our software system by analyzing specific examples of bad content that have been reported and removed to identify patterns of behavior. These patterns can then be used to teach our software to proactively find other, similar problems.” However, these revamped efforts came only after multiple reports of algorithms around the world failing to identify harmful content and instead promoting content that violated the platform’s terms of service, such as a man performing sexual acts on a chicken sandwich.

Lastly, a look at the much-touted role of the content moderator has raised serious and unexpected concerns. What good is a job if it takes far more from the worker than it gives back? What good is a job if it adds to healthcare costs and wrecks professional and personal lives? The job of content reviewer has come under criticism from many angles. Recently, a former Facebook moderator sued the company, accusing it of causing psychological harm. Former Microsoft employees sued Microsoft for similar reasons, citing alleged trauma from reviewing child pornography. In a more recent report, The Verge published a scathing account of the working conditions of Facebook’s content moderators and the harrowing nature of the job in general. “We were doing something that was darkening our soul — or whatever you call it,” one employee interviewed for the report says. “What else do you do at that point? The one thing that makes us laugh is actually damaging us. I had to watch myself when I was joking around in public. I would accidentally say [offensive] things all the time — and then be like, Oh shit, I’m at the grocery store. I cannot be talking like this.”

Image Credit: Internet

An NPR report sheds light on the work of the 15,000 moderators the company contracts worldwide, who spend their workdays wading through racism, conspiracy theories and violence. As Casey Newton notes in his story for The Verge, that number is just short of half of the 30,000-plus people Facebook had hired by the end of 2018 to work on safety and security. In the wake of The Verge’s original story, attention has shifted to working conditions for content moderators. However, this attention is not expected to bring about big results, as there are few alternatives to hiring content moderators to sift through mountains of content daily.

“It’s not really clear what the ideal circumstance would be for a human being to do this work,” Sarah T. Roberts, an assistant professor of information studies at UCLA who has been sounding the alarm about the work and conditions of content moderators for years, said in a related CNN report.

Facebook would rather talk about its advances in artificial intelligence and dangle the prospect that its reliance on human moderators will decline over time. However, that is not going to happen anytime soon: the AI-based systems are not yet fully ready or dependable, so the pressure remains on humans, at least for the foreseeable future.

Image Credit: Internet

Conclusion

The roles of governments and regulators on the one hand and big tech companies on the other have often overlapped. Today, however, given the amount of data users are generating, those roles have blurred to some extent. Still, critical differences have always existed.

The big tech companies need to step out of their over-leveraged positions and take due responsibility for the content they host, support and spread. It will not be the end of the world if content platforms become more aggressive in policing content, nor is it a matter of financial survival: the tech giants have more cash on hand than many smaller countries.

So the standoff between AI, human moderators, the content platforms that deploy both, and the target itself, harmful content, continues into the future. Merely throwing money at the problem may silence critics in the short term, but long-term improvement demands much more. Big Tech, for its part, has consistently fallen short of making long-term commitments.

Image Credit: Internet